Welcome to this exploration of the world of recommendation engines, where we’ll dive into a crucial aspect of the work: how recommendation problems are modeled, and the profound impact modeling choices can have on the results. In this blog post, I’ll share insights from my journey and experiences, highlighting instances where the right modeling strategy made all the difference.

But first, let’s be clear: The title, “Recommendation built by engineers vs. Recommendation built by scientists,” isn’t intended to pit these two groups against each other. It’s a friendly nod to the importance of precision and rigor in modeling, regardless of your professional background. So, no offense to engineers – you’re essential players in this recommendation game!

Now, let’s embark on this enlightening journey where we’ll discover why getting the modeling right is the key to unlocking the true potential of recommendation engines.

Evaluation: The Cornerstone of Recommendation Engine Development

In recommendation engines, evaluation stands tall as the linchpin of our efforts, even in the offline world. It’s the litmus test, the verdict, and the most critical judge of success in our work. Whether employing cutting-edge deep learning models or resorting to random item selection for recommendations, one thing remains constant – our models must face the scrutiny of evaluation metrics we define.

These metrics aren’t just arbitrary numbers; they are purpose-built benchmarks meticulously designed to gauge the effectiveness of our algorithms. In essence, they are the yardsticks by which we measure the performance and impact of our recommendation systems.

Now, let’s pause to reflect on the two main arenas where evaluation occurs: offline and online.

Online Evaluation: The Real-World Crucible

Online evaluation is akin to the live performance of our recommendation systems in the real world. It’s where our algorithms engage with users, make recommendations, and, most importantly, collect invaluable data on their effectiveness. A/B testing mechanisms are our trusty sidekicks in this realm, allowing us to pit different recommendation strategies against each other in a controlled and actionable manner.

Online evaluation is like a dynamic stage where we witness our algorithms in action, and it’s undoubtedly an indispensable tool in our arsenal. It provides real-time insights into how our recommendations impact user behavior, conversion rates, and engagement. Ultimately, it’s the endgame, the moment of truth when our hard work pays off – or doesn’t.

Offline Evaluation: The Wise Counsel

But let’s not overlook the wisdom of offline evaluation. This is where we take a step back from the real-time hustle and bustle and subject our models to a rigorous, controlled examination, much like a simulation in which our algorithms are put through their paces. Here, we can fine-tune and refine our recommendation strategies before they face the crucible of the live environment.

Offline evaluation metrics provide valuable insights, often serving as our compass during the development phase. They help us detect early issues, fine-tune parameters, and optimize our models before they embark on their online journey. Indeed, offline evaluation enables us to fail fast to find solutions faster.

In conclusion, whether it’s the high-stakes performance of online evaluation or the meticulous preparation of offline evaluation, both are indispensable components of recommendation engine development. So, engineers and scientists alike, take note: regardless of your background, when it comes to building recommendation engines, evaluation is where the rubber meets the road, and success is measured in metrics that we, ourselves, define.

The Quest for Ground Truth in Recommendation Engines: Unraveling the Mystery

In the enchanting world of recommendation engines, where offline evaluation metrics play a pivotal role, we encounter a unique challenge—defining the elusive concept of “ground truth.” Unlike some classic machine learning tasks, where the ground truth emerges naturally from the problem definition, the journey to discover what constitutes the ground truth in recommendation systems is fascinating and often contentious.

Consider, for a moment, the classic machine-learning problem of object detection in computer vision. In this context, we have a clear problem definition: to detect specific objects within images. The ground truth is self-evident—the presence or absence of these objects in our tagged and labeled dataset. We aim to maximize accuracy, aligning our model’s predictions with this straightforward ground truth.

Now, let’s shift our focus to recommendation problems. The waters get murkier here, and the ground truth becomes an intriguing puzzle. What, indeed, is the ground truth in recommendation systems? This question is where the debates often ignite among researchers and practitioners.

The first step in this captivating debate is defining what makes a recommendation “good.” What criteria do we use to distinguish between a recommendation that hits the mark and one that misses it entirely? The answer isn’t always crystal clear and can vary widely depending on the context, the platform, and even individual user preferences.

So, when we embark on the journey of offline evaluation in recommendation engines, we strive to understand and quantify these subtle dimensions of user preference and satisfaction. It’s a quest where the definition of success is as dynamic as the users themselves, and it’s a testament to the complexity and richness of the world of recommendation systems.

Diving Deeper: Exploring Three Recommendation Types Through Personal Experience

Let’s embark on a journey into the world of recommendation engines, guided by personal experiences that showcase the nuances of three distinct recommendation types: Similar Recommendation, Complementary Recommendation, and Home Page Recommendation. These real-world examples shed light on the intricacies of modeling and the importance of crafting tailored objective functions.

1. Similar Recommendation: Finding the Perfect Match

Imagine a recommendation engine that excels in offering a list of products similar to the one initially sought by the user. I’ve encountered this scenario countless times in my own career, where precision and relevance are paramount. Users rely on these recommendations to discover alternatives that closely align with their preferences, making the choice even more critical.

The challenge here lies in defining what “similar” truly means. Is it based on product features, user behavior, or a combination? Crafting the right objective function to optimize similarity is where the artistry of recommendation modeling truly shines.

2. Complementary Recommendation: Expanding User Horizons

A complementary recommendation is a different beast altogether. Here, the goal is to provide users with a list of items that complement their main product or purchase. It’s about enhancing their shopping experience, suggesting items they may not have considered initially but would find valuable.

As someone who’s navigated this landscape, I can attest that defining “complementary” isn’t straightforward. It hinges on understanding user preferences and their broader shopping journey. The objective function here is a complex interplay of user engagement, diversity, and serendipity – all of which require careful modeling to get right.

3. Home Page Recommendation: Anticipating User Desires

Home Page Recommendation is a fascinating facet of recommendation engines, where the platform’s landing page becomes a stage for predictive modeling. Here, the goal is to guess what users might be interested in purchasing during their subsequent interactions.

My own experiences have revealed the importance of anticipation and adaptability in this domain. The objective function may involve a blend of relevance, novelty, and user engagement. The challenge lies in keeping the home page fresh and engaging, offering a mix of familiar favorites and enticing discoveries.

In all these recommendation types, what’s evident is that there’s no one-size-fits-all solution. The key to success is crafting objective functions aligning with each recommendation scenario’s unique goals and nuances. It’s not about plugging in predefined metrics but embracing a flexible mindset, leveraging data-driven insights, and understanding user behavior’s intricacies. As we navigate this world of recommendation engines, we find that the magic happens when we tailor our modeling strategies to create personalized, engaging, and valuable user experiences.

The informal definitions above may be enough for those who only need a definition to start building a solution, however precise or imprecise it is; these are the people I call “engineers” here. Suppose we build a model that finds products in an e-commerce catalog based on visual descriptors, or one that learns the sequence of products users view and predicts the next item in the sequence as a home page recommendation. Both are recommendations, aren’t they? But the bigger question is: are they the best possible solution? Do they address the actual needs of businesses and users? That’s why I believe we should first model the true need and problem before engineering the solution.

Modeling the Three Recommendation Types: A Deep Dive into the Math

Let’s roll up our sleeves and delve into the mathematical underpinnings of modeling three intriguing recommendation types: Similar Recommendation, Complementary Recommendation, and Home Page Recommendation. We’ll kick things off by breaking down the Complementary Recommendation model, showcasing how it can be structured and evaluated.

1. Complementary Recommendation

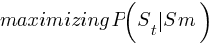

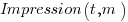

In Complementary Recommendation, the aim is to find products that complement the main product users are viewing. This can be formulated as the following equation:

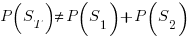

Here, $P(S_t)$ represents the probability of selling the target product $t$, and $P(S_m)$ denotes the probability of selling the main product $m$. The objective is to identify the product (or products) that maximizes the conditional probability $P(S_t \mid S_m)$ of selling the target product alongside the main one.

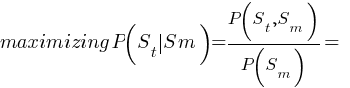

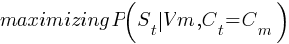

For offline evaluation and dataset creation, we need to calculate this probability function from logged data:

$$P(S_t \mid S_m) = \frac{P(S_t, S_m)}{P(S_m)} \approx \frac{\#\mathrm{Sold}(t,m) \,/\, \#\mathrm{Impression}(t,m)}{\#\mathrm{Sold}(m) \,/\, \#\mathrm{Impression}(m)}$$

Breaking it down further:

$\#\mathrm{Sold}(m)$ represents the number of items sold for product $m$.

$\#\mathrm{Impression}(m)$ is the number of times product $m$ has been shown to users.

$\#\mathrm{Sold}(t,m)$ indicates how often a session (user) purchased both items $m$ and $t$.

$\#\mathrm{Impression}(t,m)$ quantifies how frequently products $t$ and $m$ have both been shown to a session (user) during its search and exploration.

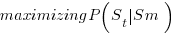

$P(S_m)$ essentially serves as the conversion rate of product $m$, and it is the same for every possible target item. Therefore, maximizing $P(S_t \mid S_m)$ boils down to identifying the product with the highest joint probability, represented as $P(S_t, S_m)$.

This modeling approach offers a systematic way to determine complementary products that can enhance users’ shopping experiences. By optimizing this equation, we can fine-tune our recommendations to align with user preferences, ultimately driving sales and satisfaction.
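To make the estimator concrete, here is a minimal Python sketch. It assumes hypothetical session logs in which each session records the set of products it was shown and the set it bought; the log format, product names, and helper names (`count_events`, `complementary_scores`) are illustrative assumptions, not part of any particular system. Each product or pair is counted at most once per session.

```python
from collections import Counter
from itertools import combinations

# Hypothetical session logs (illustrative only): products shown and bought.
sessions = [
    {"shown": {"phone", "case", "charger", "tv"}, "bought": {"phone", "case"}},
    {"shown": {"phone", "case", "tv"}, "bought": {"phone"}},
    {"shown": {"phone", "charger"}, "bought": {"phone", "charger"}},
    {"shown": {"phone", "case", "charger"}, "bought": {"phone", "case"}},
    {"shown": {"phone", "tv"}, "bought": {"tv"}},
    {"shown": {"tv", "case"}, "bought": {"tv"}},
]


def count_events(sessions):
    """Count impressions and sales for single products and unordered pairs."""
    imp, sold, co_imp, co_sold = Counter(), Counter(), Counter(), Counter()
    for s in sessions:
        imp.update(s["shown"])
        sold.update(s["bought"])
        co_imp.update(combinations(sorted(s["shown"]), 2))
        co_sold.update(combinations(sorted(s["bought"]), 2))
    return imp, sold, co_imp, co_sold


def complementary_scores(m, sessions):
    """Score each candidate t by (joint sale rate of t and m) / (sale rate of m)."""
    imp, sold, co_imp, co_sold = count_events(sessions)
    if sold[m] == 0:
        return {}  # the main product never sold: no basis for an estimate
    p_m = sold[m] / imp[m]  # conversion rate of the main product
    scores = {}
    for t in imp:
        pair = tuple(sorted((t, m)))
        if t == m or co_imp[pair] == 0:
            continue  # skip m itself and pairs never shown together
        p_tm = co_sold[pair] / co_imp[pair]  # joint probability estimate
        scores[t] = p_tm / p_m
    return scores


scores = complementary_scores("phone", sessions)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['case', 'charger', 'tv']
```

Because the main product’s conversion rate is the same for every candidate, dividing by it leaves the ranking unchanged, which mirrors the argument above. The ratio is only an approximation built from different impression counts, so it is not guaranteed to stay below 1; treat it as a ranking score.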

2. Similar Recommendation

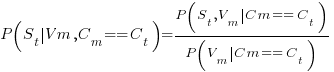

In the fascinating realm of “Similar Recommendation,” the quest is to identify products that closely resemble the one a user views. This can be modeled through the following equation:

$$t^{*} = \arg\max_{t \,:\, C_t = C_m} P(S_t \mid V_m)$$

Here, $P(S_t)$ represents the probability of selling the target product $t$, $P(V_m)$ is the probability that product $m$ has been viewed (or clicked), and $C_t$ and $C_m$ denote the category IDs of products $t$ and $m$. The condition $C_t = C_m$ ensures that both products fall into the same category; any arbitrary similarity function can replace this category match.

In essence, this modeling approach seeks to maximize the conditional probability of selling the target product $t$ given that a user has viewed product $m$ and that both products share the same category.

Like in Complementary Recommendation, we’re diving into a mathematical framework to make these recommendations smarter and more user-focused. It’s about understanding user behavior and preferences and crafting recommendations that align seamlessly with their interests and choices.

$$P(S_t \mid V_m) = \frac{P(S_t, V_m)}{P(V_m)} \approx \frac{\#\mathrm{ViewSold}(m,t)}{\#\mathrm{View}(m)}, \quad \text{for products with } C_t = C_m$$

Breaking it down further:

$\#\mathrm{ViewSold}(m,t)$ quantifies how frequently a user viewed product $m$ and bought product $t$.

$\#\mathrm{View}(m)$ indicates how often a session (user) clicked on (viewed) item $m$.

By employing this equation, we can systematically identify similar products within the same category, offering users options that closely match their current interests. It’s a powerful approach that enhances user experience and engagement while driving conversions.
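A minimal sketch of the similar-product estimate, again under assumptions: the session format, the `category` mapping, the product names, and the `similar_scores` helper are hypothetical. The category filter implements the same-category condition and could be swapped for any similarity function.

```python
from collections import Counter

# Hypothetical session logs: products each session viewed (clicked) and bought.
sessions = [
    {"viewed": {"shoe_a", "shoe_b"}, "bought": {"shoe_b"}},
    {"viewed": {"shoe_a", "shoe_c"}, "bought": {"shoe_c", "sock"}},
    {"viewed": {"shoe_a"}, "bought": {"shoe_b"}},
    {"viewed": {"shoe_a", "sock"}, "bought": {"sock"}},
]
# Hypothetical category IDs for each product.
category = {"shoe_a": "shoes", "shoe_b": "shoes", "shoe_c": "shoes", "sock": "socks"}


def similar_scores(m, sessions, category):
    """Estimate P(t sold | m viewed) as (#sessions viewing m and buying t) /
    (#sessions viewing m), keeping only candidates in the same category as m."""
    views_m = sum(1 for s in sessions if m in s["viewed"])
    if views_m == 0:
        return {}
    view_sold = Counter()
    for s in sessions:
        if m in s["viewed"]:
            view_sold.update(t for t in s["bought"] if t != m)
    return {
        t: n / views_m
        for t, n in view_sold.items()
        if category[t] == category[m]  # same-category condition
    }


print(similar_scores("shoe_a", sessions, category))  # {'shoe_b': 0.5, 'shoe_c': 0.25}
```

Note that `sock` is bought by as many viewers of `shoe_a` as `shoe_b` is; only the category filter keeps it out, which is exactly the line between similar and complementary items.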

Addressing Key Questions in Recommendation Modeling

Before we venture into modeling Home Page Recommendations, let’s take a moment to address two essential questions that arise when employing the probabilistic approach we discussed earlier.

1. The Need for an Offline Dataset:

You might wonder, if we’ve correctly formulated the problem using the probability-based model, why bother with an offline dataset and inference models for complementary items? After all, we can calculate the probabilities and select the highest one, right? While this assumption is theoretically sound, the real world presents a practical challenge.

In a vast e-commerce landscape with millions of items, realistically only a fraction of products will have enough data points to estimate these probabilities accurately. Data scarcity is a significant issue for many niche or less popular items. Therefore, we build an offline dataset that follows the model’s definition, use it as a validation set, and evaluate our models on it. This allows us to generalize our recommendations to products with limited data, making the recommendation engine robust and valuable across the entire product catalog.
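One way to picture the data-scarcity problem, sketched under explicit assumptions: the popularity curve below is synthetic (the product at popularity rank r gets about 10,000 / r views), and `min_views` is a hypothetical reliability threshold. Products above the threshold are dependable sources of offline ground truth; the rest is the long tail a learned model has to generalize to.

```python
def split_by_support(view_counts, min_views):
    """Split main products into those with enough data to serve as offline
    ground truth and a long tail that a learned model must generalize to."""
    supported = sorted(m for m, n in view_counts.items() if n >= min_views)
    sparse = sorted(m for m, n in view_counts.items() if n < min_views)
    return supported, sparse


# Synthetic long-tail catalog (an assumption, not real data): the product
# with popularity rank r receives roughly 10,000 / r views.
view_counts = {f"p{r}": 10_000 // r for r in range(1, 1001)}
supported, sparse = split_by_support(view_counts, min_views=100)
print(len(supported), len(sparse))  # 100 900
```

Under this toy curve, only 10% of the catalog clears the threshold; the exact share depends entirely on the real popularity distribution and the threshold chosen.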

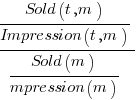

2. Interpreting $P(S_t)$ and $P(V_t)$:

What’s the difference between maximizing $P(S_t)$ and maximizing $P(V_t)$? To clarify, $P(S_t)$ represents the Conversion Rate (CR) of product $t$, calculated as the number of sold items $t$ divided by the number of impressions for item $t$. On the other hand, $P(V_t)$ represents the Click-Through Rate (CTR) of item $t$, indicating how frequently users click on item $t$ when it’s presented.

The key distinction lies in the objective we aim to achieve. If the business goal is primarily to drive sales and conversions, maximizing $P(S_t)$ is the priority. However, there are scenarios where we want to encourage user exploration and engagement rather than immediate purchases. In such cases, maximizing $P(V_t)$ becomes the focus.

For instance, if we want users to click on recommended items, explore additional features, or engage more with the platform, optimizing for $P(V_t)$ might be more suitable. This objective aligns with broader user interaction and engagement goals beyond immediate conversions.

By making this distinction, we tailor our recommendation strategies to suit the specific aims of the business, allowing us to fine-tune our approach for different contexts and user behaviors.
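A small sketch of how the two objectives can disagree. The counters below are invented for illustration, and `rank` is a hypothetical helper that ranks products either by conversion rate (sales per impression) or by click-through rate (clicks per impression).

```python
# Hypothetical counters collected over the same number of impressions.
stats = {
    "smart_tv": {"impressions": 1000, "clicks": 450, "sales": 5},
    "headphones": {"impressions": 1000, "clicks": 300, "sales": 20},
    "gift_card": {"impressions": 1000, "clicks": 80, "sales": 40},
}


def rank(stats, objective):
    """Rank products by conversion rate ("cr": sales / impressions)
    or click-through rate ("ctr": clicks / impressions)."""
    numerator = {"cr": "sales", "ctr": "clicks"}[objective]
    rate = {t: s[numerator] / s["impressions"] for t, s in stats.items()}
    return sorted(rate, key=rate.get, reverse=True)


print(rank(stats, "cr"))   # ['gift_card', 'headphones', 'smart_tv']
print(rank(stats, "ctr"))  # ['smart_tv', 'headphones', 'gift_card']
```

In this toy data the two rankings come out exactly reversed: the product users click most converts least, so the choice of objective decides what the home page shows.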

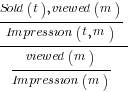

Dataset Structure:

Let’s consider the dataset structure as follows:

For each main product $P_i$, we construct a row that lists a set of products that maximize the probability function. This structure provides a snapshot of potential recommendations for each main product.

Example:

For Similar Recommendations, we create rows like this:

$$P_i \rightarrow [\,P_{i,1},\ P_{i,2},\ \dots,\ P_{i,k}\,]$$

Here, each $P_i$ represents a main product, and $P_{i,1}, \dots, P_{i,k}$ are products that are considered similar to $P_i$, sorted by their relevant probability function $P(S_t \mid V_{P_i})$.

For Complementary Recommendations, the structure remains the same, but the definition changes:

$$P_i \rightarrow [\,P_{i,1},\ P_{i,2},\ \dots,\ P_{i,k}\,]$$

Now, $P_i$ represents a main product, and $P_{i,1}, \dots, P_{i,k}$ are products that complement $P_i$, sorted by the probability function $P(S_t \mid S_{P_i})$.

We can efficiently manage and evaluate recommendations for both types by adopting this consistent dataset structure. The key distinction lies in how we populate these rows: for Similar Recommendation, we identify products similar to the main item, while for Complementary Recommendation, we pinpoint items that complement the main product.

This unified approach streamlines the process of dataset creation and management, making it easier to handle the complexity of recommendation engines. It ensures we’re well-equipped to assess and fine-tune our recommendation models, optimizing them for various scenarios and user needs.
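The row structure lends itself to a simple offline check. The sketch below assumes the probability scores have already been computed per main product from logged data, and it uses recall@k as the metric; recall@k is only one reasonable choice, since the text does not prescribe a specific metric. All names, products, and numbers are hypothetical.

```python
def build_rows(scores_by_main, k):
    """Turn {main product: {candidate: score}} into rows P_i -> top-k candidates."""
    return {
        main: sorted(scores, key=scores.get, reverse=True)[:k]
        for main, scores in scores_by_main.items()
    }


def recall_at_k(predicted, truth_rows, k):
    """Average share of each row's ground-truth items found in the model's top k."""
    per_row = []
    for main, truth in truth_rows.items():
        if not truth:
            continue
        top_k = set(predicted.get(main, [])[:k])
        per_row.append(len(top_k & set(truth)) / len(truth))
    return sum(per_row) / len(per_row) if per_row else 0.0


# Hypothetical probability scores from logged data (the ground truth) ...
truth_rows = build_rows(
    {
        "phone": {"case": 0.83, "charger": 0.42, "tv": 0.0},
        "tv": {"hdmi_cable": 0.5, "wall_mount": 0.3},
    },
    k=2,
)
# ... and a hypothetical model's top-k predictions for the same main products.
predicted = {"phone": ["charger", "tv"], "tv": ["hdmi_cable", "wall_mount"]}
print(truth_rows)  # {'phone': ['case', 'charger'], 'tv': ['hdmi_cable', 'wall_mount']}
print(recall_at_k(predicted, truth_rows, k=2))  # 0.75
```

The same two functions work for Similar and Complementary rows; only the scores fed into `build_rows` change.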

3. Home Page Recommendation

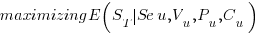

In the intricate landscape of Home Page Recommendation, where user interactions span search queries, item views, purchases, likes, and comments, we aim to maximize a nuanced objective function:

$$\max_{T}\ \mathbb{E}\left[\#\mathrm{Sold}(T) \mid Q_u, V_u, B_u, L_u\right]$$

Where

$T$ is the list of items recommended on the home page, and $\#\mathrm{Sold}(T)$ is the number of those items that user $u$ buys

$Q_u$ represents queries searched by user $u$

$V_u$ represents products viewed by user $u$

$B_u$ represents products purchased by user $u$

$L_u$ represents products commented on (liked) by user $u$

This expression signifies our endeavor to maximize the expectation of selling a curated list of items $T$ for a user $u$, considering their respective actions $(Q_u, V_u, B_u, L_u)$. It’s an approach that considers a user’s entire journey and aims to make the home page a more engaging and relevant space.

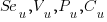

However, it’s worth noting that the objective function can be tailored to various scenarios and business goals. For instance, if the primary objective is to maximize user engagement and interaction, we can adjust the function to $\max_{T}\ \mathbb{E}\left[\#\mathrm{Click}(T) \mid Q_u, V_u, B_u, L_u\right]$, focusing on the number of listed items we expect users to click.

Moreover, we can introduce additional criteria to fine-tune our recommendations, such as $\max_{T}\ \mathbb{E}_{u \in S}\left[\#\mathrm{Sold}(T) \mid Q_u, V_u, B_u, L_u\right]$, which means that we want to maximize the objective not for all users but specifically for users $u \in S$ who are members of a given segment $S$. This level of granularity allows for highly targeted and personalized recommendations.
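As a rough illustration of the segment-level objective, the sketch below picks one home-page list of size k by averaging click probabilities over a set of users. It assumes each item’s click probability does not depend on which other items share the list, so the expected number of clicks is a sum of per-item probabilities and the top-k items maximize it. The users, items, probabilities, and the `best_list` helper are hypothetical.

```python
def best_list(click_prob, users, k):
    """Choose the k items with the highest average click probability over
    `users`; under the per-item independence assumption this maximizes the
    average expected number of clicks for a list of size k."""
    items = {t for u in users for t in click_prob[u]}
    avg = {
        t: sum(click_prob[u].get(t, 0.0) for u in users) / len(users)
        for t in items
    }
    # Sort by descending average, breaking ties by item name for determinism.
    return sorted(avg, key=lambda t: (-avg[t], t))[:k]


# Hypothetical per-user click probabilities given each user's past actions.
click_prob = {
    "u1": {"a": 0.5, "b": 0.1, "c": 0.2},
    "u2": {"a": 0.4, "b": 0.1, "c": 0.3},
    "u3": {"a": 0.0, "b": 0.6, "c": 0.2},
}
all_users = list(click_prob)
segment = ["u3"]  # hypothetical segment S
print(best_list(click_prob, all_users, k=2))  # ['a', 'b']
print(best_list(click_prob, segment, k=2))    # ['b', 'c']
```

The same function covers both objectives: passing all users optimizes globally, while passing only the members of a segment optimizes for that segment, and the two lists can differ.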

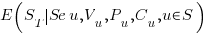

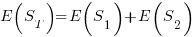

Now, let’s discuss the key distinction between maximizing expectation and finding the highest probabilities. Understanding this difference is crucial for achieving more precise modeling. For instance, when we aim to maximize  , which is selling a list of items.

, which is selling a list of items.

For better understanding, let’s assume a scenario: we might assume that the probability of selling item t  and another item

and another item  are independent. In other words, the probability of selling an iPhone when recommended alongside a Samsung phone is the same as when recommended alongside an LG phone. Thus, we might simplify the problem as follows:

are independent. In other words, the probability of selling an iPhone when recommended alongside a Samsung phone is the same as when recommended alongside an LG phone. Thus, we might simplify the problem as follows:

+ … +

+ … +  where 1,2,… t ∊ T

where 1,2,… t ∊ T

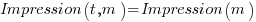

This independence assumption can significantly impact the results and depends on our overall strategy. However, it’s important to recognize that expectations are not the same as probabilities:

+ … +

+ … + where 1,2,… t ∊ T

where 1,2,… t ∊ T

Choosing whether to make the independence assumption or not should be a conscious decision driven by the specifics of your recommendation strategy and the impact you want to achieve. It underscores the importance of understanding the underlying mathematics to make informed modeling choices.
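A short numeric check of the difference, assuming independent sales and hypothetical per-item sale probabilities for a three-item list. The expected number of items sold is the sum of the probabilities, while the probability of selling at least one item is 1 minus the product of the per-item probabilities of not selling; a brute-force enumeration of every sold/not-sold outcome confirms both numbers. The helper names are illustrative.

```python
from itertools import product
from math import prod


def expected_items_sold(probs):
    """Expected number of items sold: the sum of per-item sale probabilities
    (linearity of expectation, given each item's probability within the list)."""
    return sum(probs)


def prob_at_least_one_sold(probs):
    """Probability that at least one item sells, assuming independent sales."""
    return 1 - prod(1 - p for p in probs)


def brute_force(probs):
    """Enumerate every sold/not-sold outcome under independence as a check."""
    expected, at_least_one = 0.0, 0.0
    for outcome in product((0, 1), repeat=len(probs)):
        weight = prod(p if sold else 1 - p for sold, p in zip(outcome, probs))
        expected += weight * sum(outcome)
        if any(outcome):
            at_least_one += weight
    return expected, at_least_one


probs = [0.5, 0.3, 0.2]  # hypothetical per-item sale probabilities for one user
print(round(expected_items_sold(probs), 4), round(prob_at_least_one_sold(probs), 4))  # 1.0 0.72
```

An expectation of 1.0 items sold coexists with only a 72% chance of selling anything, so ranking lists by one quantity is not the same as ranking them by the other.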

Modeling Home Page Recommendations with expectations offers a dynamic and flexible approach, allowing us to fine-tune recommendations based on business goals and user engagement. It’s a reminder that, in recommendation engines, the path to optimization often lies in carefully crafting mathematical models that suit the desired outcomes.

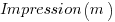

The Pitfall of Overestimating Modeling Functions

In the realm of recommendation engines, it’s not uncommon for teams to overestimate the power of their modeling functions. Let’s examine a common pitfall, especially in the context of Complementary Recommendation, where we focus on estimating $P(S_t \mid S_m)$ based on co-purchase data.

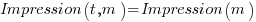

The challenge arises when we attempt to simplify the calculation by replacing $\#\mathrm{Sold}(t,m) / \#\mathrm{Impression}(t,m)$ with $\#\mathrm{Sold}(t,m)$, removing the impression factor to reduce computational complexity. The assumption made here is that $\#\mathrm{Impression}(t,m)$ is the same constant for every pair of products, effectively ignoring the co-impression between products $t$ and $m$.

However, this simplification has consequences. By assuming that $\#\mathrm{Impression}(t,m)$ is constant, we treat all products equally when it comes to impressions, regardless of how often they are actually shown together with other items. This leads to a significant oversight: the score no longer discounts regularly sold products that appear in almost every basket. A best-seller shown next to nearly every main product accumulates a high co-purchase count simply because it is shown and bought so often, whereas dividing by $\#\mathrm{Impression}(t,m)$ would normalize that exposure away.

As a result, in the realm of Complementary Recommendation, this simplification can skew the recommendations toward products that are heavily sold but may not be the most relevant or complementary choices for users. It’s a classic example of how overestimating the modeling function can lead to suboptimal results and recommendations that miss the mark regarding user satisfaction and engagement.
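A toy example of the pitfall, with hypothetical numbers for one main product, a phone: a water bottle that is a store-wide best-seller is shown next to almost everything, so it gathers many co-purchases, while a phone case is shown with the phone far less often but bought with it at a much higher rate. The two helpers are illustrative, not a production scorer.

```python
# Hypothetical pair statistics for the main product "phone":
# co_sold[t]: sessions that bought both t and the phone;
# co_imp[t]: sessions that were shown both t and the phone.
co_sold = {"water_bottle": 30, "phone_case": 20, "screen_guard": 12}
co_imp = {"water_bottle": 1000, "phone_case": 50, "screen_guard": 40}


def rank_by_count(co_sold):
    """Simplified score: raw co-purchase count, co-impressions ignored."""
    return sorted(co_sold, key=co_sold.get, reverse=True)


def rank_by_rate(co_sold, co_imp):
    """Impression-aware score: co-purchases per co-impression."""
    rate = {t: co_sold[t] / co_imp[t] for t in co_sold}
    return sorted(rate, key=rate.get, reverse=True)


print(rank_by_count(co_sold))         # ['water_bottle', 'phone_case', 'screen_guard']
print(rank_by_rate(co_sold, co_imp))  # ['phone_case', 'screen_guard', 'water_bottle']
```

Raw co-purchase counts put the best-seller first; dividing by co-impressions demotes it to last.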

This cautionary tale underscores the importance of maintaining a balance between modeling sophistication and the realism of assumptions. It’s a reminder that, in the quest to optimize recommendation engines, careful attention to detail and a nuanced understanding of user behavior often yield the most accurate and valuable results.

Conclusion: The Hidden Steps of Recommendation Engine Development

In the intricate world of recommendation engines, the journey to building effective and optimized systems involves several hidden steps that are often overlooked but hold the key to success. Let’s take a moment to recap the essential phases of recommendation engine development, highlighting the significance of these often underestimated steps:

1. Define Recommendation Needs and Clarify Objectives:

The foundation of any recommendation system lies in understanding the specific needs and objectives it aims to fulfill. This initial step sets the direction for the entire development process.

2. Model the Problem and Articulate Needs in a Probabilistic Framework:

Crafting a clear, probabilistic problem model is the cornerstone of effective recommendation. It allows us to express the problem mathematically and formulate precise objectives.

3. Prepare and Create a Dataset for Validation Models:

Data is the lifeblood of recommendation engines. Constructing a robust dataset representative of real-world scenarios is essential for training and, especially, for validating models.

4. Design and Define Online and Offline Evaluation Metrics:

Evaluation metrics guide us toward optimized recommendation strategies. Defining them early ensures we can measure and compare model performance accurately.

5. Develop a Model to Solve the Problem:

This step involves the creation of recommendation algorithms and models tailored to the problem’s unique requirements.

6. Evaluate Models on Offline Dataset and Test Hypotheses:

Offline evaluation provides a crucial checkpoint for model performance and hypothesis testing. It allows us to assess how well our models align with defined objectives.

7. Online Test and Evaluate Models:

Real-world online testing is the ultimate proving ground for recommendation systems. It offers insights into user behavior and system impact, helping refine and optimize models.

It’s worth noting that some teams, despite their sophisticated models and efforts, may skip steps 1-4 and 6, opting to develop models and test them directly online (steps 5 and 7). While this approach can expedite the process, it may lead to suboptimal results and hinder the ability to diagnose and improve models. We recommend a more comprehensive approach that encompasses all seven steps. By diligently clarifying needs, articulating problems probabilistically, creating representative datasets, and defining rigorous evaluation metrics, we lay the groundwork for more accurate, effective, and user-centric recommendation engines.

In conclusion, the hidden steps of recommendation engine development serve as a roadmap for achieving not just functional but highly optimized systems that truly meet user needs and business objectives.